The rapid growth of generative AI technology has been a catalyst for business productivity, creating new opportunities for greater efficiency, enhanced customer service experiences, and more successful customer outcomes. Today’s generative AI advances are helping existing technologies achieve their long-promised potential. For example, voice-first applications have been gaining traction across industries for years, from customer service to education to personal voice assistants and agents. But early versions of this technology struggled to interpret human speech or mimic real conversation. Until recently, building real-time, natural-sounding, low-latency voice AI remained complex, especially when working with streaming infrastructure and speech foundation models (FMs).

The rapid progress of conversational AI technology has led to the development of powerful models that address the historical challenges of traditional voice-first applications. Amazon Nova Sonic is a state-of-the-art speech-to-speech FM designed to build real-time conversational AI applications in Amazon Bedrock. This model offers industry-leading price-performance and low latency. The Amazon Nova Sonic architecture unifies speech understanding and generation into a single model, to enable real, human-like voice conversations in AI applications.

Amazon Nova Sonic accommodates the breadth and richness of human language. It can understand speech in different speaking styles and generate speech in expressive voices, including both masculine-sounding and feminine-sounding voices. Amazon Nova Sonic can also adapt the patterns of stress, intonation, and style of the generated speech response to align with the context and content of the speech input. Additionally, Amazon Nova Sonic supports function calling and knowledge grounding with enterprise data using Retrieval-Augmented Generation (RAG). To further simplify the process of getting the most from this technology, Amazon Nova Sonic is now integrated with LiveKit’s WebRTC framework, a widely used platform that enables developers to build real-time audio, video, and data communication applications. This integration makes it possible for developers to build conversational voice interfaces without needing to manage complex audio pipelines or signaling protocols. In this post, we explain how this integration works, how it addresses the historical challenges of voice-first applications, and some initial steps to start using this solution.

Solution overview

LiveKit is a popular open source WebRTC platform that provides scalable, multi‑user real‑time video, audio, and data communication. Designed as a full-stack solution, it offers a Selective Forwarding Unit (SFU) architecture; modern client SDKs across web, mobile, and server environments; and built‑in features such as speaker detection, bandwidth optimization, simulcast support, and seamless room management. You can deploy it as a self-hosted system or on AWS, so developers can focus on application logic without managing the underlying media infrastructure.

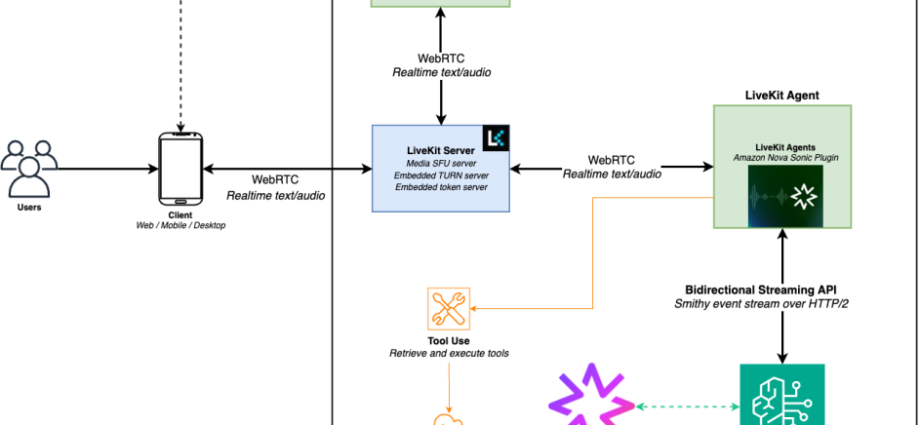

Building real-time, voice-first AI applications requires developers to manage multiple layers of infrastructure, from handling audio capture and streaming protocols to coordinating signaling, routing, and event-driven state management. Working with bidirectional streaming models such as Amazon Nova Sonic has often meant setting up custom pipelines, managing audio buffers, and working to maintain low-latency performance across diverse client environments. These tasks add development overhead and require specialized knowledge in networking and real-time systems, making it difficult to quickly prototype or scale production-ready voice AI solutions.

To address this complexity, we implemented a real-time plugin for Amazon Nova Sonic in the LiveKit Agents SDK. This solution removes the need for developers to manage audio signaling, streaming protocols, or custom transport layers. LiveKit handles real-time audio routing and session management, and Amazon Nova Sonic powers speech understanding and generation. Together, LiveKit and Amazon Nova Sonic provide a streamlined, production-ready setup for building voice-first AI applications. Features such as full-duplex audio, voice activity detection, and noise suppression are available out of the box, so developers can focus on application logic rather than infrastructure orchestration.

The following video shows Amazon Nova Sonic and LiveKit in action. You can find the code for this example in the LiveKit Examples GitHub repo.

The following diagram illustrates the solution architecture of Amazon Nova Sonic deployed as a voice agent in the LiveKit framework on AWS.

Prerequisites

To implement the solution, you must have the following prerequisites:

- Python version 3.12 or higher

- An AWS account with appropriate AWS Identity and Access Management (IAM) permissions for Amazon Bedrock

- Access to Amazon Nova Sonic on Amazon Bedrock

- A web browser (such as Google Chrome or Mozilla Firefox) with WebRTC support
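Before installing anything, it can help to confirm that the local interpreter meets the Python 3.12 requirement listed above. The following is a minimal sketch of such a check. The helper name `meets_python_requirement` is hypothetical and is not part of LiveKit or AWS tooling.

```python
import sys

# Minimum interpreter version, taken from the prerequisites list.
MIN_PYTHON = (3, 12)


def meets_python_requirement(version_info=None, minimum=MIN_PYTHON):
    """Return True if (major, minor) of version_info is at least `minimum`.

    Illustrative helper only; it is not provided by any SDK.
    """
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) >= tuple(minimum)


if __name__ == "__main__":
    found = sys.version.split()[0]
    if meets_python_requirement():
        print("Python version OK:", found)
    else:
        print("Python 3.12 or higher is required; found", found)
```

Passing an explicit tuple, for example `meets_python_requirement((3, 11, 9))`, makes it possible to check a version other than the one running the script.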

Deploy the solution

Complete the following steps to get started talking to Amazon Nova Sonic through LiveKit:

- Install the necessary dependencies:

uv is a fast, drop-in replacement for pip, used in the LiveKit Agents SDK (you can also choose to use pip).

- Set up a new local virtual environment:

- To run the LiveKit server locally, open a new terminal (for example, a new UNIX process) and run the following command:

You must keep the LiveKit server running for the entire duration that the Amazon Nova Sonic agent is running, because it’s responsible for proxying data between parties.
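Because the agent depends on the LiveKit server staying up, a quick reachability check can save some debugging time. The sketch below only tests whether something accepts TCP connections on the port; it does not confirm that the listener is actually LiveKit. The port 7880 comes from the `ws://localhost:7880` address used later in this post, and the function name `livekit_server_reachable` is a hypothetical helper.

```python
import socket


def livekit_server_reachable(host="localhost", port=7880, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout`.

    Illustrative helper: a successful connection only shows that some process
    is listening on the port, not that it is a LiveKit server.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    if livekit_server_reachable():
        print("Something is listening on localhost:7880")
    else:
        print("Nothing is listening on localhost:7880; is the LiveKit server running?")
```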

- Generate an access token using the following code. The default values for api-key and api-secret are devkey and secret, respectively. When creating an access token for permission to join a LiveKit room, you must specify the room name and user identity.

- Create environment variables. You must specify the AWS credentials:
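A small check like the following can confirm that the credentials are visible to the process before the agent starts. It is a sketch under the assumption that the standard AWS SDK variable names (`AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, `AWS_DEFAULT_REGION`) are the ones in use; temporary credentials would also need `AWS_SESSION_TOKEN`. The helper name `missing_aws_variables` is hypothetical.

```python
import os

# Assumed variable names: the standard ones read by AWS SDKs.
REQUIRED_AWS_VARS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_DEFAULT_REGION",
)


def missing_aws_variables(environ=None, required=REQUIRED_AWS_VARS):
    """Return the names in `required` that are unset or empty in `environ`.

    Illustrative helper only; `environ` defaults to the process environment.
    """
    if environ is None:
        environ = os.environ
    return [name for name in required if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_aws_variables()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("AWS credential variables are set")
```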

- Create the main.py file:

- Run the main.py file:

Now you’re ready to connect to the agent frontend.

- Go to https://agents-playground.livekit.io/.

- Choose Manual.

- In the first text field, enter ws://localhost:7880.

- In the second text field, enter the access token you generated.

- Choose Connect.

You should now be able to talk to Amazon Nova Sonic in real time.

If you’re disconnected from the LiveKit room, you will have to restart the agent process (main.py) to talk to Amazon Nova Sonic again.

Clean up

This example runs locally, so no special teardown steps are required for cleanup. You can simply exit the agent and LiveKit server processes. The only costs incurred are for the calls to Amazon Bedrock to talk to Amazon Nova Sonic. After you have disconnected from the LiveKit room, you will no longer incur charges and no AWS resources will remain in use.

Conclusion

Thanks to generative AI, the qualitative benefits long promised by voice-first applications can now be realized. By combining Amazon Nova Sonic with LiveKit’s WebRTC infrastructure, developers can build real-time, voice-first AI applications with less complexity and faster deployment. The integration reduces the need for custom audio pipelines, so teams can focus on building engaging conversational experiences.

“Our goal with this integration is to simplify the development of real-time voice applications,” said Josh Wulf, CEO of LiveKit. “By combining LiveKit’s robust media routing and session management with Nova Sonic’s speech capabilities, we’re helping developers move faster—no need to manage low-level infrastructure, so they can focus on building the conversation.”

To learn more about Amazon Nova Sonic, read the AWS News Blog, Amazon Nova Sonic product page, and Amazon Nova Sonic User Guide. To get started with Amazon Nova Sonic in Amazon Bedrock, visit the Amazon Bedrock console.

About the authors

Glen Ko is an AI developer at AWS Bedrock, where his focus is on enabling the proliferation of open source AI tooling and supporting open source innovation.

Anuj Jauhari is a Senior Product Marketing Manager at Amazon Web Services, where he helps customers realize value from innovations in generative AI.

Osman Ipek is a Solutions Architect on Amazon’s AGI team focusing on Nova foundation models. He guides teams to accelerate development through practical AI implementation strategies, with expertise spanning voice AI, NLP, and MLOps.
