Information theory and machine learning are deeply connected, and that connection traces back to Claude Shannon’s groundbreaking 1948 paper, “A Mathematical Theory of Communication.”

Shannon’s work laid the foundation for modern information theory, and its applications in machine learning have expanded significantly in recent years.

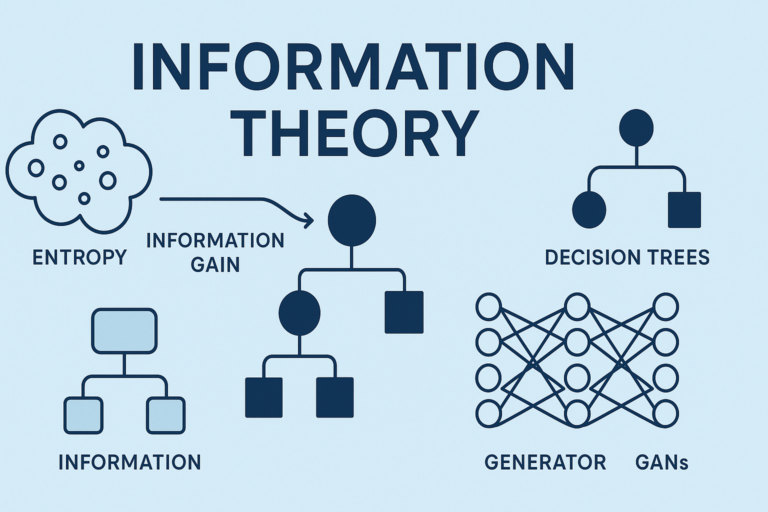

Machine learning relies heavily on information theory to understand and manipulate data. The concept of entropy, which Shannon introduced, is essential in understanding how machines learn from data.

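Entropy measures the amount of uncertainty or randomness in a random variable or, in practice, in the empirical distribution of a dataset. For a discrete variable X with outcome probabilities p(x), it is defined as H(X) = -Σ p(x) log2 p(x) and is measured in bits. It is a critical concept in machine learning because it quantifies how predictable the data is, for example how mixed the class labels in a dataset are.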
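To make the definition concrete, here is a minimal sketch that estimates entropy from observed values. The function name `shannon_entropy` and the coin-flip samples are illustrative assumptions, not something taken from Shannon’s paper, and the estimate is only as good as the sample it is computed from.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Estimate Shannon entropy (in bits) from the empirical frequencies of `values`."""
    total = len(values)
    counts = Counter(values)
    # Sum p * log2(1 / p) over the observed outcomes; unseen outcomes contribute nothing.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Hypothetical samples: a balanced coin, a biased coin, and a constant sequence.
fair_coin = ["H", "T", "H", "T"]
biased_coin = ["H", "H", "H", "T"]
constant = ["H", "H", "H", "H"]

print(shannon_entropy(fair_coin))    # 1.0 bit: maximally uncertain for two outcomes
print(shannon_entropy(biased_coin))  # below 1 bit: more predictable
print(shannon_entropy(constant))     # 0.0 bits: no uncertainty at all
```

The more evenly the outcomes are spread, the higher the entropy; a sequence with a single repeated value carries no uncertainty.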

The relationship between information theory and machine learning is further strengthened by the concept of mutual information, which measures how much knowing one variable reduces uncertainty about another.
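As a rough illustration, mutual information between two discrete variables can be estimated from paired samples by comparing the joint frequencies with the product of the marginal frequencies. The sketch below assumes small, discrete data; the function name `mutual_information` and the toy lists are hypothetical.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Estimate I(X; Y) in bits from paired discrete samples."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts of each (x, y) pair
    count_x = Counter(xs)         # marginal counts of x
    count_y = Counter(ys)         # marginal counts of y
    mi = 0.0
    for (x, y), c in joint.items():
        # p(x, y) / (p(x) * p(y)) written with raw counts.
        ratio = (c * n) / (count_x[x] * count_y[y])
        mi += (c / n) * math.log2(ratio)
    return mi


# Hypothetical data: `copy` fully determines `signal`, `unrelated` tells us nothing.
signal = [0, 0, 1, 1]
copy = [0, 0, 1, 1]
unrelated = [0, 1, 0, 1]

print(mutual_information(signal, copy))       # 1.0 bit: fully dependent
print(mutual_information(signal, unrelated))  # 0.0 bits: independent in this sample
```

Estimates from small samples can be biased, so in practice this kind of plug-in estimate is usually treated as a rough guide rather than an exact value.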

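Shannon also established the theoretical basis for data compression: entropy sets a lower bound on the average number of bits needed to encode data losslessly. The same idea matters in machine learning, where compact representations of data can reduce storage and computation and, in some cases, improve model performance.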
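The link between randomness and compressibility can be observed with a short experiment. The sketch below uses Python’s standard `zlib` module as a stand-in for a compressor; it is a general-purpose tool rather than an optimal entropy coder, so the numbers only illustrate the trend. The helper `compression_ratio` and the sample data are assumptions made for this example.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Return compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)


n = 10_000

# Highly regular data: a two-byte pattern repeated many times.
repetitive = b"ab" * (n // 2)

# Pseudo-random bytes from a seeded generator, so the run is reproducible.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(n))

print(f"repetitive: {compression_ratio(repetitive):.3f}")
print(f"noisy:      {compression_ratio(noisy):.3f}")
```

The repetitive input shrinks to a small fraction of its size, while the near-random input barely compresses at all, which is what its high entropy would lead one to expect.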

Information Theory and Machine Learning

Information theory provides a framework for understanding and analyzing complex data in machine learning. It helps researchers identify patterns and structures in data that can be leveraged to improve model performance.

Many machine learning algorithms use information-theoretic quantities to measure the relationships between variables and to guide predictions. Decision trees, for example, commonly choose splits that yield the largest reduction in entropy.

These ideas appear in methods used for image recognition, natural language processing, and speech recognition.

The relationship between information theory and machine learning is not limited to theory. It has real-world applications in areas such as image compression, data storage, and communication systems.

Information-theoretic measures have also motivated new algorithms and techniques for improving model performance.

Applications of Information Theory in Machine Learning

- Data compression: Represents data in fewer bits, which reduces storage and computation and can improve model performance.

- Mutual information: Quantifies how much one variable tells us about another, which helps in selecting informative features and making predictions.

- Entropy: Measures the amount of uncertainty or randomness in a variable or dataset.
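One common way these quantities show up in practice is information gain, the reduction in label entropy obtained by splitting a dataset on a feature, which decision-tree learners often use to pick splits. The following is a minimal sketch under that assumption; the helper names and the tiny weather-style dataset are hypothetical and exist only for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of the empirical label distribution."""
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def information_gain(feature_values, labels):
    """Entropy of the labels minus the weighted entropy after splitting on the feature."""
    n = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    # Weighted average of the label entropy inside each group.
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Hypothetical toy dataset: `outlook` separates the labels perfectly, `windy` does not.
outlook = ["sunny", "sunny", "rainy", "rainy"]
windy = [True, False, True, False]
play = ["no", "no", "yes", "yes"]

print(information_gain(outlook, play))  # 1.0: the split removes all uncertainty
print(information_gain(windy, play))    # 0.0: the split tells us nothing
```

A feature with higher information gain leaves less uncertainty about the label after the split, which is why it would be preferred as a split criterion in this toy setting.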

Taken together, these ideas give machine learning a principled way to measure uncertainty, dependence, and compressibility in data, with practical uses ranging from image recognition, natural language processing, and speech recognition to image compression, data storage, and communication systems.

The underlying concepts are developed in Claude Shannon’s 1948 paper, “A Mathematical Theory of Communication.”

Read the original article on Machine Learning Mastery.