**Thinking Machines Lab Makes Tinker Generally Available: Adds Kimi K2 Thinking And Qwen3-VL Vision Input**

**Introduction**

Thinking Machines Lab has made its Tinker training API generally available and added three major capabilities: support for the Kimi K2 Thinking reasoning model, OpenAI-compatible sampling, and image input through Qwen3-VL vision language models.

**What is Tinker?**

Tinker is a training API for large language model fine-tuning that hides the heavy lifting of distributed training. Users write a simple Python loop that runs on a CPU-only machine and defines the data or RL environment, the loss, and the training logic. The Tinker service maps this loop onto a cluster of GPUs and executes exactly the computation the user specifies.
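Tinker's actual client API is not reproduced here. As a hedged illustration of the division of labor described above, the sketch below runs a toy "service" locally: the user-side loop chooses the data, loss, and update schedule, while a hypothetical `ToyTrainingService` object executes each `forward_backward` and `optim_step` call. All class and method names are illustrative stand-ins rather than the Tinker SDK, and the "model" is a single-weight linear regression, not an LLM.

```python
from dataclasses import dataclass


@dataclass
class ToyTrainingService:
    """Hypothetical stand-in for a remote trainer holding one weight w for y = w * x."""
    w: float = 0.0
    grad: float = 0.0

    def forward_backward(self, batch):
        """Compute mean squared error on the batch and store its gradient w.r.t. w."""
        n = len(batch)
        loss = sum((self.w * x - y) ** 2 for x, y in batch) / n
        self.grad = sum(2 * (self.w * x - y) * x for x, y in batch) / n
        return loss

    def optim_step(self, learning_rate):
        """Apply one plain SGD update using the stored gradient, then clear it."""
        self.w -= learning_rate * self.grad
        self.grad = 0.0


def train(service, data, steps, learning_rate):
    """User-side loop: the caller decides what data to send and when to step."""
    losses = []
    for _ in range(steps):
        losses.append(service.forward_backward(data))
        service.optim_step(learning_rate)
    return losses


if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # points on y = 2x
    service = ToyTrainingService()
    losses = train(service, data, steps=100, learning_rate=0.05)
    print(f"final w = {service.w:.3f}, final loss = {losses[-1]:.6f}")
```

The point of the split is that the loop body stays ordinary Python on the client, while the expensive `forward_backward` and `optim_step` work is what a service like Tinker would execute on GPUs.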

**General Availability and Kimi K2 Thinking**

The December 2025 update removes Tinker's waitlist: anyone can now sign up and access the current model lineup and pricing. Users can also fine-tune moonshotai/Kimi-K2-Thinking on Tinker, a reasoning model with about 1 trillion total parameters in a mixture of experts architecture.
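"Total parameters" matters here because a mixture of experts model runs only a few experts per token. The sketch below shows generic top-k expert routing with toy numbers: a router scores every expert, only the k highest-scoring experts run, and their outputs are combined with softmax weights over the selected scores. The expert count, k, and logits are illustrative assumptions, not Kimi K2's real configuration, and real MoE layers compute router logits per token with learned weights.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    max_logit = max(router_logits[i] for i in chosen)  # subtract max for numerical stability
    exps = {i: math.exp(router_logits[i] - max_logit) for i in chosen}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts and return their router-weighted sum."""
    weights = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in weights.items())


def active_fraction(num_experts, k):
    """Fraction of routed-expert parameters used per token (ignores shared layers)."""
    return k / num_experts


if __name__ == "__main__":
    experts = [lambda x, scale=s: scale * x for s in range(4)]  # toy experts: multiply by 0..3
    logits = [0.1, 2.0, 0.5, 1.0]
    print(top_k_route(logits, k=2))
    print(moe_forward(1.0, experts, logits, k=2))
    print(active_fraction(num_experts=4, k=2))
```

Because unselected experts never run, per-token compute scales with k rather than with the full expert count, which is why a model's total parameter count can be far larger than the parameters active on any single token.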


**OpenAI Compatible Sampling While Training**

Tinker adds a second sampling path that mirrors the OpenAI completions interface. Users can sample from in-training checkpoints by passing a tinker://… model URI to standard OpenAI-style clients and tooling.
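To make "mirrors the OpenAI completions interface" concrete, the sketch below builds (but does not send) a standard OpenAI-style completions request in which the checkpoint URI takes the place of the model name. The base URL, the `tinker://` placeholder path, and the API key are hypothetical placeholders, not values from the Tinker documentation; only the request fields (`model`, `prompt`, `max_tokens`, `temperature`) follow the public OpenAI completions format.

```python
import json
import urllib.request

# Hypothetical placeholders: neither value comes from the Tinker docs.
BASE_URL = "https://example.invalid/v1"  # replace with the endpoint Tinker documents
CHECKPOINT_URI = "tinker://<your-checkpoint-path>"  # copy the real URI from your training run


def build_completion_request(prompt, max_tokens=64, temperature=0.7, api_key="YOUR_API_KEY"):
    """Build (but do not send) an OpenAI-style POST /completions request."""
    payload = {
        "model": CHECKPOINT_URI,  # the in-training checkpoint acts as the model name
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_completion_request("The capital of France is")
    print(req.get_method(), req.full_url)
    print(json.loads(req.data)["model"])
```

With the official `openai` Python client, the same idea would mean constructing the client with a `base_url` pointing at Tinker's endpoint and passing the checkpoint URI as `model` to `client.completions.create(...)`; check Tinker's documentation for the actual endpoint and authentication scheme.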

**Vision Input with Qwen3-VL on Tinker**

Tinker exposes two Qwen3-VL vision language models, Qwen/Qwen3-VL-30B-A3B-Instruct and Qwen/Qwen3-VL-235B-A22B-Instruct, which can be used for both training and sampling through the same API surface. To send an image to a model, users construct a ModelInput that interleaves an ImageChunk with text chunks.
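The interleaving idea can be pictured as an ordered list of chunks, where position in the list determines where the image appears in the prompt. The sketch below uses local, hypothetical dataclasses (`ToyTextChunk`, `ToyImageChunk`, `ToyModelInput`) rather than Tinker's real `ModelInput` and `ImageChunk` types, whose exact fields are not given in the announcement; the token IDs and image bytes are made-up examples, and a real prompt would come from the model's tokenizer and an actual image file.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class ToyTextChunk:
    """Hypothetical stand-in for a run of already-tokenized text."""
    tokens: List[int]


@dataclass
class ToyImageChunk:
    """Hypothetical stand-in for raw image bytes plus their encoding format."""
    data: bytes
    format: str  # e.g. "png" or "jpeg"


@dataclass
class ToyModelInput:
    """Ordered chunks; list order defines where the image sits in the prompt."""
    chunks: List[Union[ToyTextChunk, ToyImageChunk]]

    def num_images(self):
        return sum(isinstance(c, ToyImageChunk) for c in self.chunks)

    def text_tokens(self):
        return [t for c in self.chunks if isinstance(c, ToyTextChunk) for t in c.tokens]


def build_vision_prompt(prefix_tokens, image_bytes, suffix_tokens, image_format="png"):
    """Interleave text, one image, then more text."""
    return ToyModelInput(chunks=[
        ToyTextChunk(tokens=prefix_tokens),
        ToyImageChunk(data=image_bytes, format=image_format),
        ToyTextChunk(tokens=suffix_tokens),
    ])


if __name__ == "__main__":
    prompt = build_vision_prompt([101, 102, 103], b"\x89PNG...", [104, 105])
    print(prompt.num_images(), prompt.text_tokens())
```

Keeping the image as its own chunk, instead of pre-converting it to tokens, leaves the vision encoding to the server side, which is one plausible reason for an interleaved-chunk design.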


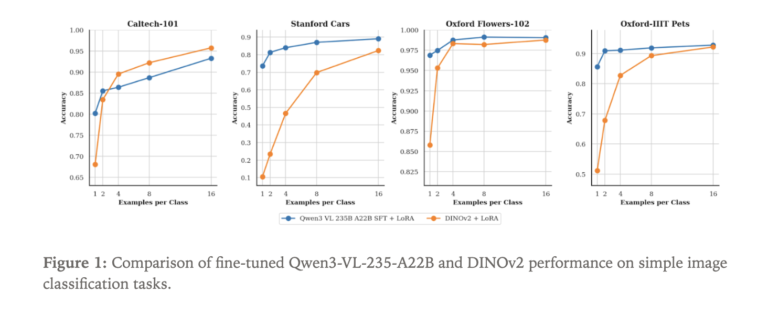

**Experiment: Qwen3-VL Versus DINOv2 on Image Classification**

The Tinker team fine-tuned Qwen3-VL-235B-A22B-Instruct as an image classifier on four standard datasets: Caltech 101, Stanford Cars, Oxford Flowers, and Oxford Pets. They used LoRA adapters within Tinker to train both Qwen3-VL-235B-A22B-Instruct and a DINOv2 base model. The experiment swept the number of labeled examples per class, starting from a single example per class and increasing from there.
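A sweep over "labeled examples per class" needs a reproducible way to carve k-shot training sets out of a labeled dataset. The sketch below is one common approach, not the Tinker team's published procedure: shuffle each class once with a fixed seed, then take the first k items per class, so every larger shot count contains the smaller ones. The shot values 1, 2, 4 and the toy dataset are hypothetical; the article only says the sweep started at one example per class.

```python
import random
from collections import defaultdict


def shuffled_by_class(examples, seed=0):
    """Group (item, label) pairs by label and shuffle each group once, reproducibly."""
    by_label = defaultdict(list)
    for item, label in examples:
        by_label[label].append((item, label))
    rng = random.Random(seed)
    for label in sorted(by_label):  # fixed label order keeps the RNG sequence stable
        rng.shuffle(by_label[label])
    return by_label


def shot_sweep(examples, shots, seed=0):
    """Return {k: training subset with up to k examples per class} for each k in shots."""
    pools = shuffled_by_class(examples, seed)
    return {
        k: [ex for label in sorted(pools) for ex in pools[label][:k]]
        for k in shots
    }


if __name__ == "__main__":
    # Hypothetical toy dataset: 5 "cat" and 5 "dog" image IDs.
    data = [(f"img{i}", "cat" if i % 2 else "dog") for i in range(10)]
    sweep = shot_sweep(data, shots=[1, 2, 4])
    for k, subset in sweep.items():
        print(k, len(subset), subset)
```

Taking prefixes of a single shuffle keeps the subsets nested, so differences between shot counts come from the added examples rather than from a fresh random draw at each k.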

**Key Takeaways**

Tinker is now generally available: anyone can sign up and fine-tune open-weight LLMs through a Python training loop while Tinker handles the distributed training backend. The lineup now includes Kimi K2 Thinking, Moonshot AI's roughly 1-trillion-parameter mixture of experts reasoning model, as a fine-tunable option.


**Conclusion**

Thinking Machines reports that Qwen3-VL-235B-A22B-Instruct, fine-tuned on Tinker, achieves stronger few-shot image classification performance than a DINOv2 base model on Caltech 101, Stanford Cars, Oxford Flowers, and Oxford Pets, highlighting the data efficiency of large vision language models.

[**Read the Tinker general availability announcement from Thinking Machines**](https://thinkingmachines.ai/blog/tinker-general-availability/)

The post Thinking Machines Lab Makes Tinker Generally Available: Adds Kimi K2 Thinking And Qwen3-VL Vision Input appeared first on MarkTechPost.