Generative AI has emerged as a transformative technology in healthcare, driving digital transformation in essential areas such as patient engagement and care management. It has shown potential to change how clinicians deliver care through automated diagnostic support tools that provide timely, personalized suggestions, ultimately leading to better health outcomes. For example, a study published in BMC Medical Education reported that medical students who received large language model (LLM)-generated feedback during simulated patient interactions significantly improved their clinical decision-making compared to those who did not.

At the center of most generative AI systems are LLMs capable of generating remarkably natural conversations, enabling healthcare customers to build products across billing, diagnosis, treatment, and research that can perform tasks and operate independently under human oversight. However, realizing the value of generative AI requires an understanding of its potential risks and impacts on healthcare service delivery, which calls for careful planning, definition, and execution of a system-level approach to building safe and responsible generative AI-infused applications.

In this post, we focus on the design phase of building healthcare generative AI applications, including defining system-level policies that determine the inputs and outputs. These policies can be thought of as guidelines that, when followed, help build a responsible AI system.

Designing responsibly

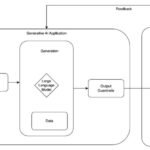

LLMs can transform healthcare by reducing cost and time, but realizing that value depends on addressing considerations such as quality and reliability. As shown in the following diagram, responsible AI considerations can be successfully integrated into an LLM-powered healthcare application by accounting for quality, reliability, trust, and fairness for everyone. The goal is to build specific responsible AI properties into the system. Examples include the following:

- Each component’s input and output is aligned with clinical priorities, which promotes controllability

- Safeguards, such as guardrails, are implemented to enhance the safety and reliability of your AI system

- Comprehensive AI red-teaming and evaluations are applied to the entire end-to-end system to assess safety and privacy-impacting inputs and outputs

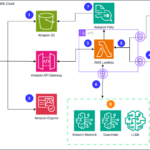

Conceptual architecture

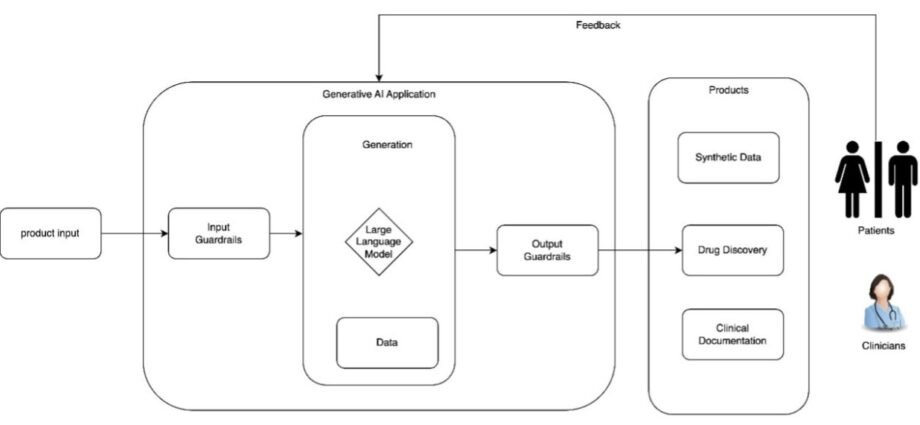

The following diagram shows a conceptual architecture of a generative AI application with an LLM. The inputs (directly from an end-user) are mediated through input guardrails. After the input has been accepted, the LLM can process the user’s request using internal data sources. The output of the LLM is again mediated through guardrails and can be shared with end-users.
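The flow in the diagram can be expressed as a small control loop. The following Python sketch is illustrative only: the guardrail functions and the `llm` callable are hypothetical placeholders that you would back with real services (for example, Amazon Bedrock Guardrails and a model invocation), and the blocked-response message is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical guardrail signature: returns None if the text is acceptable,
# or a short reason string if the text should be blocked.
Guardrail = Callable[[str], Optional[str]]

BLOCKED_MESSAGE = "Sorry, I can't help with that request."  # assumed wording


@dataclass
class PipelineResult:
    text: str
    blocked: bool
    reason: Optional[str] = None


def run_pipeline(user_input: str,
                 input_guardrails: list[Guardrail],
                 llm: Callable[[str], str],
                 output_guardrails: list[Guardrail]) -> PipelineResult:
    # 1. Screen the raw end-user input before it reaches the model.
    for check in input_guardrails:
        reason = check(user_input)
        if reason is not None:
            return PipelineResult(BLOCKED_MESSAGE, True, f"input: {reason}")

    # 2. Only accepted input is passed to the LLM, which may consult internal data sources.
    response = llm(user_input)

    # 3. Screen the model output before it is shared with the end user.
    for check in output_guardrails:
        reason = check(response)
        if reason is not None:
            return PipelineResult(BLOCKED_MESSAGE, True, f"output: {reason}")

    return PipelineResult(response, False)
```

Keeping input and output checks as separate lists mirrors the diagram: each stage can be tuned or audited independently, and a request blocked at the input stage never reaches the model.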

Establish governance mechanisms

When building generative AI applications in healthcare, it’s essential to consider the various risks at the individual model or system level, as well as at the application or implementation level. The risks associated with generative AI can differ from or even amplify existing AI risks. Two of the most important risks are confabulation and bias:

- Confabulation — The model generates confident but erroneous outputs, sometimes referred to as hallucinations. This could mislead patients or clinicians.

- Bias — This refers to the risk of exacerbating historical societal biases among different subgroups, which can result from non-representative training data.
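To make bias risk measurable, one option is to compare error rates across patient subgroups on a labeled evaluation set. The following sketch assumes you already have `(subgroup, is_correct)` pairs from your own evaluation process; the function names are hypothetical, and what counts as an acceptable gap between subgroups is a policy decision for your clinical context.

```python
from collections import defaultdict
from typing import Iterable


def subgroup_error_rates(records: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Compute the error rate per subgroup from (subgroup, is_correct) pairs."""
    totals: dict[str, int] = defaultdict(int)
    errors: dict[str, int] = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        if not correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates: dict[str, float]) -> float:
    """Largest difference in error rate between any two subgroups."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```

A large gap does not by itself prove the model is biased (small subgroups produce noisy rates), but it flags where closer review and more representative data may be needed.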

To mitigate these risks, consider establishing content policies that clearly define the types of content your applications should avoid generating. These policies should also guide how to fine-tune models and which appropriate guardrails to implement. It is crucial that the policies and guidelines are tailored and specific to the intended use case. For instance, a generative AI application designed for clinical documentation should have a policy that prohibits it from diagnosing diseases or offering personalized treatment plans.
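A content policy is easier to enforce and review when it is written down as data rather than only as prose. The following sketch encodes the clinical documentation example as a hypothetical `ContentPolicy` with two denied topics. The keyword check is a deliberately naive stand-in for illustration; production systems would typically rely on a managed guardrail or a trained classifier instead. Amazon Bedrock Guardrails, for instance, lets you define denied topics with a name, a natural-language definition, and sample phrases.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DeniedTopic:
    name: str
    definition: str
    example_phrases: tuple[str, ...]


@dataclass
class ContentPolicy:
    use_case: str
    denied_topics: list[DeniedTopic] = field(default_factory=list)

    def violations(self, text: str) -> list[str]:
        # Naive, case-insensitive phrase matching; for illustration only.
        lowered = text.lower()
        return [topic.name for topic in self.denied_topics
                if any(phrase in lowered for phrase in topic.example_phrases)]


# Hypothetical policy for a clinical documentation assistant.
CLINICAL_DOCUMENTATION_POLICY = ContentPolicy(
    use_case="clinical documentation",
    denied_topics=[
        DeniedTopic("diagnosis",
                    "Stating or suggesting a diagnosis for a patient.",
                    ("your diagnosis is", "you likely have", "you are suffering from")),
        DeniedTopic("treatment_plan",
                    "Recommending a personalized treatment or medication regimen.",
                    ("you should take", "increase your dose", "treatment plan for you")),
    ],
)
```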

Defining clear, detailed policies that are specific to your use case is fundamental to building responsibly. This approach fosters trust and helps developers and healthcare organizations carefully weigh the risks, benefits, limitations, and societal implications of each LLM in a particular application.

The following are some example policies you might consider using for your healthcare-specific applications. The first table summarizes the roles and responsibilities for human-AI configurations.

| Action ID | Suggested Action | Generative AI Risks |
| --- | --- | --- |
| GV-3.2-001 | Policies are in place to bolster oversight of generative AI systems with independent evaluations or assessments of generative AI models or systems where the type and robustness of evaluations are proportional to the identified risks. | CBRN Information or Capabilities; Harmful Bias and Homogenization |
| GV-3.2-002 | Consider adjustment of organizational roles and components across lifecycle stages of large or complex generative AI systems, including: test and evaluation, validation, and red-teaming of generative AI systems; generative AI content moderation; generative AI system development and engineering; increased accessibility of generative AI tools, interfaces, and systems; and incident response and containment. | Human-AI Configuration; Information Security; Harmful Bias and Homogenization |
| GV-3.2-003 | Define acceptable use policies for generative AI interfaces, modalities, and human-AI configurations (for example, for AI assistants and decision-making tasks), including criteria for the kinds of queries generative AI applications should refuse to respond to. | Human-AI Configuration |
| GV-3.2-004 | Establish policies for user feedback mechanisms for generative AI systems that include thorough instructions and any mechanisms for recourse. | Human-AI Configuration |
| GV-3.2-005 | Engage in threat modeling to anticipate potential risks from generative AI systems. | CBRN Information or Capabilities; Information Security |

The following table summarizes policies for risk management in AI system design.

| Action ID | Suggested Action | Generative AI Risks |
| --- | --- | --- |
| GV-4.1-001 | Establish policies and procedures that address continual improvement processes for generative AI risk measurement. Address general risks associated with a lack of explainability and transparency in generative AI systems by using ample documentation and techniques such as application of gradient-based attributions, occlusion or term reduction, counterfactual prompts and prompt engineering, and analysis of embeddings. Assess and update risk measurement approaches at regular cadences. | Confabulation |
| GV-4.1-002 | Establish policies, procedures, and processes detailing risk measurement in context of use with standardized measurement protocols and structured public feedback exercises such as AI red-teaming or independent external evaluations. | CBRN Information and Capability; Value Chain and Component Integration |
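Structured red-teaming exercises produce more useful evidence when results are tracked per risk category over time. The following minimal harness is a sketch under stated assumptions: `system` stands in for your end-to-end application, `is_refusal` is a hypothetical judge you would supply (a rule, a human reviewer, or a model-based grader), and the category labels reuse risk names from the tables above.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class RedTeamCase:
    prompt: str
    category: str        # for example "Information Security" or "Confabulation"
    should_refuse: bool  # expected behavior under your acceptable use policy


def evaluate(cases: Iterable[RedTeamCase],
             system: Callable[[str], str],
             is_refusal: Callable[[str], bool]) -> dict[str, float]:
    """Return, per category, the fraction of cases where refusal behavior matched expectation."""
    passed: dict[str, int] = {}
    total: dict[str, int] = {}
    for case in cases:
        ok = is_refusal(system(case.prompt)) == case.should_refuse
        total[case.category] = total.get(case.category, 0) + 1
        passed[case.category] = passed.get(case.category, 0) + int(ok)
    return {category: passed[category] / total[category] for category in total}
```

Including benign prompts with `should_refuse=False` matters as much as adversarial ones: a system that refuses everything would otherwise look perfectly safe.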

Transparency artifacts

Promoting transparency and accountability throughout the AI lifecycle can foster trust, facilitate debugging and monitoring, and enable audits. This involves documenting data sources, design decisions, and limitations through tools like model cards and offering clear communication about experimental features. Incorporating user feedback mechanisms further supports continuous improvement and fosters greater confidence in AI-driven healthcare solutions.

AI developers and DevOps engineers should be transparent about the evidence and reasoning behind outputs by clearly documenting the underlying data sources and design decisions. Transparency enables the tracking of potential problems and facilitates the evaluation of AI systems by both internal and external teams. Transparency artifacts guide AI researchers and developers on the responsible use of the model, promote trust, and help end-users make informed decisions about the use of the system.
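One lightweight way to keep this documentation consistent is to maintain it as a structured record that is versioned alongside the application and published as JSON. The following sketch uses hypothetical field names chosen to mirror the items discussed here (data sources, design decisions, limitations, experimental status); it does not follow the Amazon SageMaker model card schema or the AWS AI Service Card format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class TransparencyCard:
    # All field names are illustrative assumptions, not an AWS schema.
    system_name: str
    foundation_model: str
    intended_uses: list[str]
    out_of_scope_uses: list[str]
    data_sources: list[str]
    design_decisions: list[str]
    known_limitations: list[str]
    experimental: bool = False   # surface experimental status to clinicians
    feedback_contact: str = ""

    def to_json(self) -> str:
        # Serialize the whole card so it can be published or diffed between releases.
        return json.dumps(asdict(self), indent=2)
```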

The following are some implementation suggestions:

- When building AI features with experimental models or services, it’s essential to highlight the possibility of unexpected model behavior so healthcare professionals can accurately assess whether to use the AI system.

- Consider publishing artifacts such as Amazon SageMaker model cards or AWS system cards. Also, at AWS we provide detailed information about our AI systems through AWS AI Service Cards, which list intended use cases and limitations, responsible AI design choices, and deployment and performance optimization best practices for some of our AI services. AWS also recommends establishing transparency policies and processes for documenting the origin and history of training data while balancing the proprietary nature of training approaches. Consider creating a hybrid document that combines elements of both model cards and service cards, because your application likely uses foundation models (FMs) but provides a specific service.

- Offer a user feedback mechanism. Gathering regular, scheduled feedback from healthcare professionals can help developers make the refinements needed to improve system performance. Also consider establishing policies for user feedback mechanisms that include thorough instructions and any mechanisms for recourse.
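A feedback mechanism supports recourse only if low-rated responses are routed somewhere actionable. The following sketch assumes a hypothetical 1 to 5 rating scale and escalation threshold; it records clinician feedback against a response ID and lists the responses that should go to human review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from statistics import mean


@dataclass
class Feedback:
    response_id: str
    reviewer_role: str   # for example "physician" or "nurse"
    rating: int          # assumed scale: 1 (unsafe or wrong) to 5 (accurate and useful)
    comment: str = ""
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def __post_init__(self) -> None:
        if not 1 <= self.rating <= 5:
            raise ValueError("rating must be between 1 and 5")


def summarize(feedback: list[Feedback], escalation_threshold: int = 2) -> dict:
    return {
        "count": len(feedback),
        "mean_rating": mean(f.rating for f in feedback) if feedback else None,
        # Low-rated responses are queued for human review and possible recourse.
        "escalate": [f.response_id for f in feedback if f.rating <= escalation_threshold],
    }
```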

Security by design

When developing AI systems, consider security best practices at each layer of the application. Generative AI systems might be vulnerable to adversarial attacks such as prompt injection, which exploits the vulnerability of LLMs by manipulating their inputs or prompts. These types of attacks can result in data leakage, unauthorized access, or other security breaches. To address these concerns, it can be helpful to perform a risk assessment and implement guardrails for both the input and output layers of the application. As a general rule, your operating model should be designed to perform the following actions:

- Safeguard patient privacy and data security by implementing personally identifiable information (PII) detection and configuring guardrails that check for prompt attacks

- Continually assess the benefits and risks of all generative AI features and tools and regularly monitor their performance through Amazon CloudWatch alarms or other monitoring tools

- Thoroughly evaluate all AI-based tools for quality, safety, and equity before deploying
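For the monitoring item, one approach is to publish a custom CloudWatch metric each time a guardrail check runs, then create an alarm on it. The following sketch calls the CloudWatch `put_metric_data` API on a client you pass in (in practice, `boto3.client("cloudwatch")`); the namespace, metric name, and dimension names are hypothetical choices, not AWS defaults.

```python
from typing import Any

NAMESPACE = "HealthcareGenAI/Guardrails"  # hypothetical custom namespace


def record_guardrail_event(cloudwatch: Any, application: str,
                           stage: str, intervened: bool) -> dict:
    """Publish one data point per guardrail check (1.0 if it intervened, else 0.0)."""
    metric = {
        "MetricName": "GuardrailInterventions",  # hypothetical metric name
        "Dimensions": [
            {"Name": "Application", "Value": application},
            {"Name": "Stage", "Value": stage},  # for example "INPUT" or "OUTPUT"
        ],
        "Value": 1.0 if intervened else 0.0,
        "Unit": "Count",
    }
    cloudwatch.put_metric_data(Namespace=NAMESPACE, MetricData=[metric])
    return metric
```

Recording 0 for checks that pass, rather than only recording interventions, lets you alarm on the intervention rate (the Average statistic) as well as the count (the Sum statistic).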

Developer resources

The following resources are useful when architecting and building generative AI applications:

- Amazon Bedrock Guardrails helps you implement safeguards for your generative AI applications based on your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple FMs, providing a consistent user experience and standardizing safety and privacy controls across your generative AI applications.

- The AWS responsible AI whitepaper serves as an invaluable resource for healthcare professionals and other developers that are developing AI applications in critical care environments where errors could have life-threatening consequences.

- AWS AI Service Cards explain the use cases for which the service is intended, how machine learning (ML) is used by the service, and key considerations in the responsible design and use of the service.
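As a starting point with Amazon Bedrock Guardrails, the Bedrock Runtime `ApplyGuardrail` API can evaluate text against a guardrail you have already created, independently of model invocation. The following sketch wraps that call; the guardrail ID and version are placeholders, and the client is passed in so the function can be exercised without AWS credentials (in practice, `boto3.client("bedrock-runtime")`).

```python
from typing import Any


def check_with_bedrock_guardrail(runtime: Any, guardrail_id: str, guardrail_version: str,
                                 text: str, source: str) -> tuple[bool, str]:
    """Return (intervened, text_to_use). source is "INPUT" or "OUTPUT"."""
    response = runtime.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,
        content=[{"text": {"text": text}}],
    )
    if response["action"] == "GUARDRAIL_INTERVENED":
        # outputs holds the text the guardrail substituted,
        # such as a configured blocked message or masked PII.
        outputs = response.get("outputs", [])
        return True, outputs[0]["text"] if outputs else ""
    # No intervention: pass the original text through unchanged.
    return False, text
```

Calling it once with `source="INPUT"` before the model and once with `source="OUTPUT"` after it matches the input and output guardrail layers of the conceptual architecture.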

Conclusion

Generative AI has the potential to improve nearly every aspect of healthcare by enhancing care quality, patient experience, clinical safety, and administrative efficiency through responsible implementation. When designing, developing, or operating an AI application, try to systematically consider potential limitations by establishing a governance and evaluation framework grounded in the need to maintain the safety, privacy, and trust that your users expect.

For more information about responsible AI, refer to the following resources:

About the authors

Tonny Ouma is an Applied AI Specialist at AWS, specializing in generative AI and machine learning. As part of the Applied AI team, Tonny helps internal teams and AWS customers incorporate leading-edge AI systems into their products. In his spare time, Tonny enjoys riding sports bikes, golfing, and entertaining family and friends with his mixology skills.

Simon Handley, PhD, is a Senior AI/ML Solutions Architect in the Global Healthcare and Life Sciences team at Amazon Web Services. He has more than 25 years’ experience in biotechnology and machine learning and is passionate about helping customers solve their machine learning and life sciences challenges. In his spare time, he enjoys horseback riding and playing ice hockey.