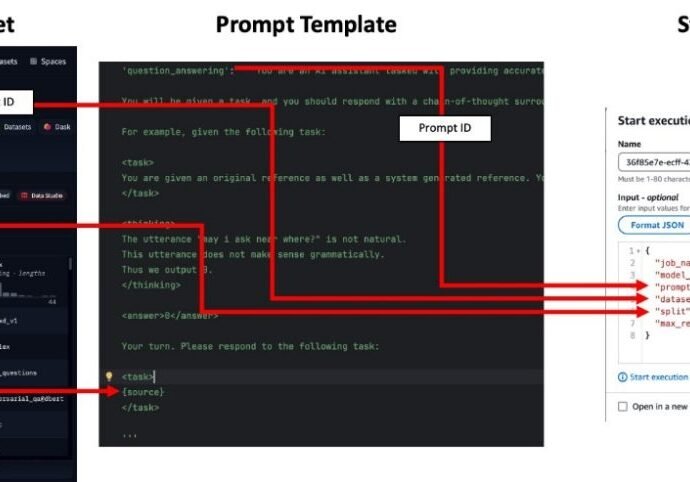

Implement automated monitoring for Amazon Bedrock batch inference | Amazon Web Services

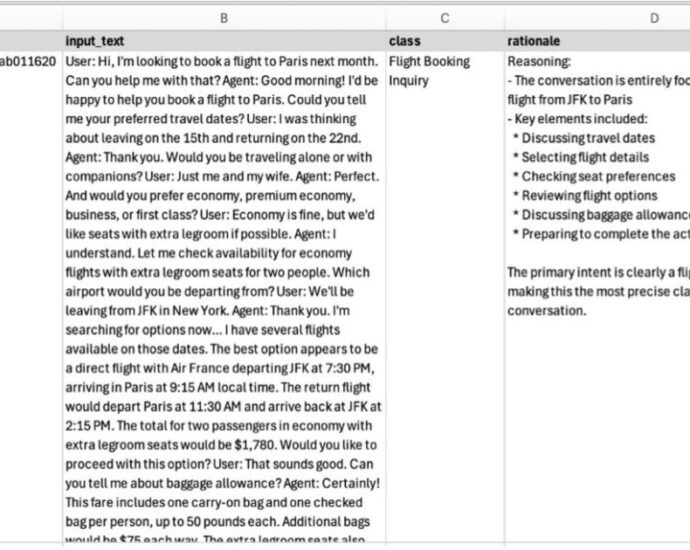

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies through a single API, along with capabilities to build generative AI applications with security, privacy, and responsible AI. Batch inference in Amazon Bedrock is for larger workloads where immediate responses…