Implementing tenant isolation using Agents for Amazon Bedrock in a multi-tenant environment | Amazon Web Services

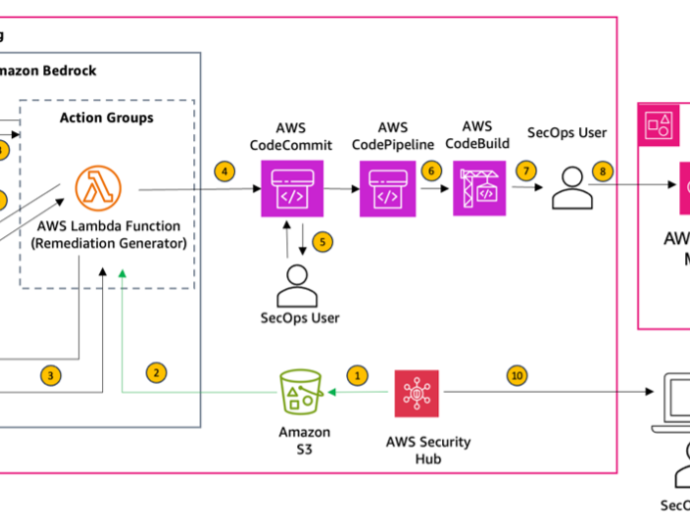

Generative artificial intelligence (AI) features are becoming more common in software offerings, especially now that market-leading foundation models (FMs) can be consumed through an API using Amazon Bedrock. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models from leading AI companies like AI21 Labs, Anthropic, ...