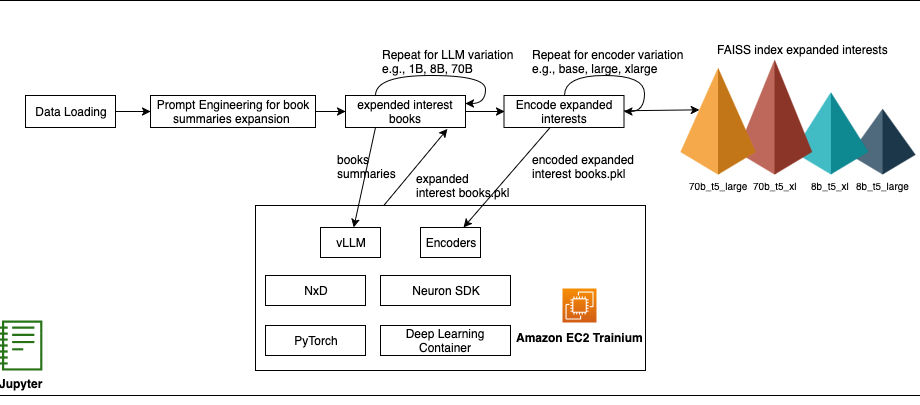

Boost cold-start recommendations with vLLM on AWS Trainium | Amazon Web Services

Cold start in recommendation systems goes beyond new-user or new-item problems: it’s the complete absence of personalized signals at launch. When someone first arrives, or when fresh content appears, there’s no behavioral history to tell the engine what they care about, so everyone ends up in broad generic…