Beyond the basics: A comprehensive foundation model selection framework for generative AI | Amazon Web Services

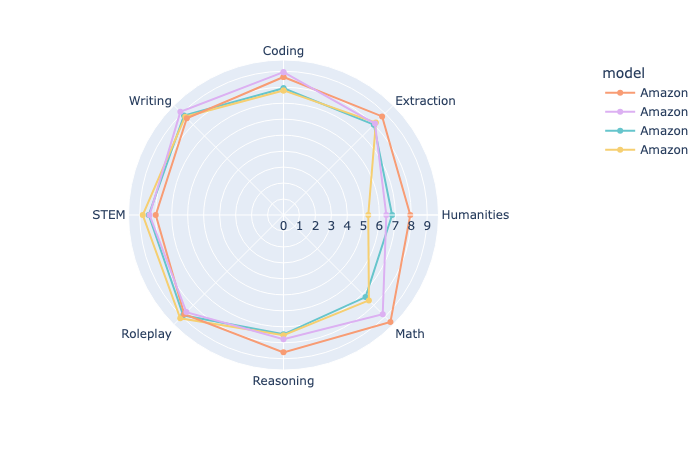

Most organizations evaluating foundation models limit their analysis to three primary dimensions: accuracy, latency, and cost. These metrics are a useful starting point, but they oversimplify the complex interplay of factors that determines real-world model performance. Foundation models have revolutionized how enterprises develop generative AI applications, offering…