Ground truth generation and review best practices for evaluating generative AI question-answering with FMEval | Amazon Web Services

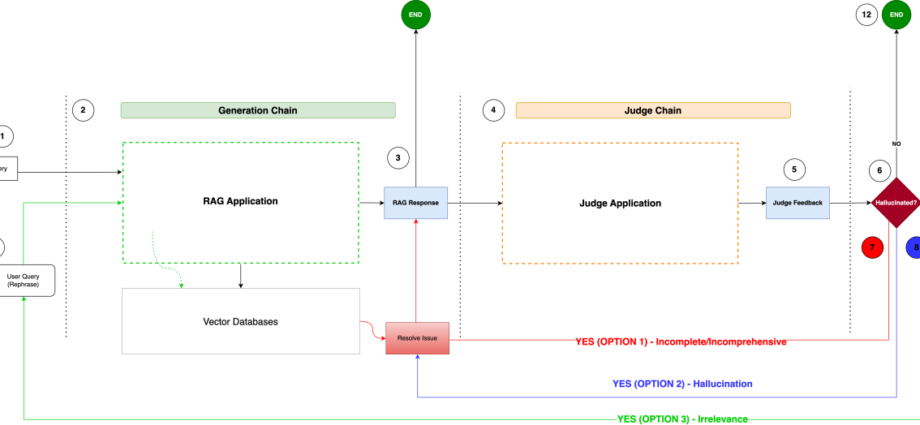

Generative AI question-answering applications are pushing the boundaries of enterprise productivity. These assistants can be powered by various backend architectures including Retrieval Augmented Generation (RAG), agentic workflows, fine-tuned large language models (LLMs), or a combination of these techniques. However, building and deploying trustworthy AI assistants requires a robust ground truthContinue Reading