How GoDaddy built a category generation system at scale with batch inference for Amazon Bedrock | Amazon Web Services

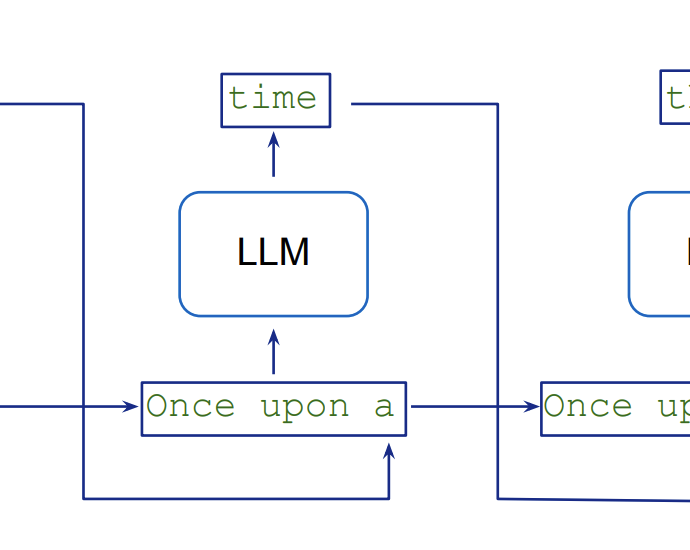

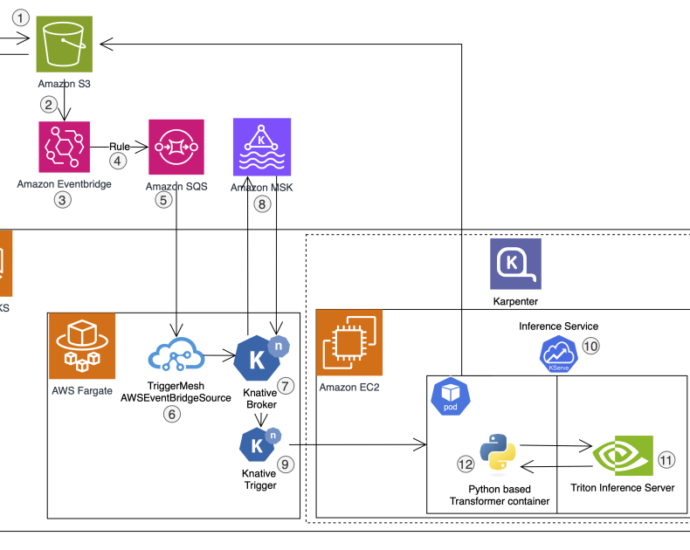

This post was co-written with Vishal Singh, Data Engineering Leader on the Data & Analytics team at GoDaddy.

Generative AI solutions have the potential to transform businesses by boosting productivity and improving customer experiences, and using large language models (LLMs) in these solutions has become increasingly popular. However, inference of LLMs…