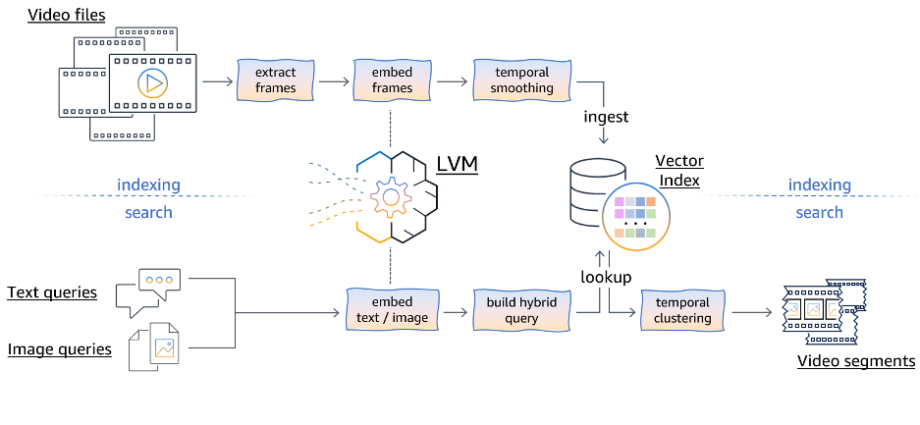

Implement semantic video search using open source large vision models on Amazon SageMaker and Amazon OpenSearch Serverless | Amazon Web Services

As companies and individual users deal with constantly growing amounts of video content, the ability to perform low-effort search to retrieve videos or video segments using natural language becomes increasingly valuable. Semantic video search offers a powerful solution to this problem, so users can search for relevant video content based…