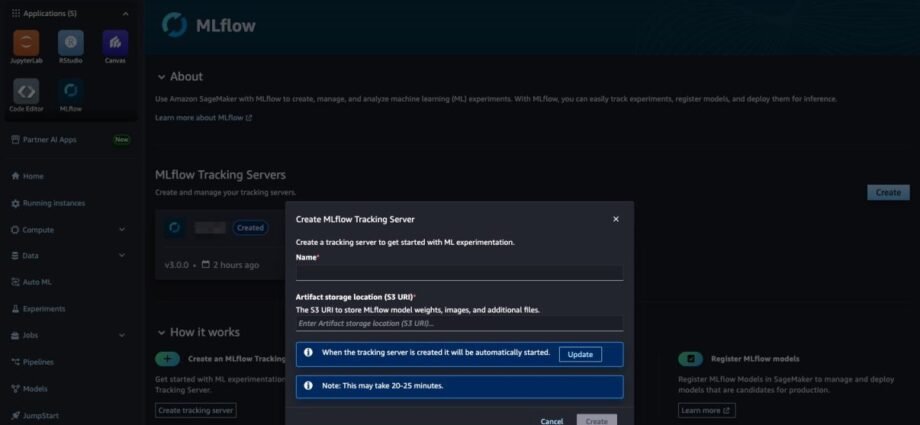

Accelerating generative AI development with fully managed MLflow 3.0 on Amazon SageMaker AI | Amazon Web Services

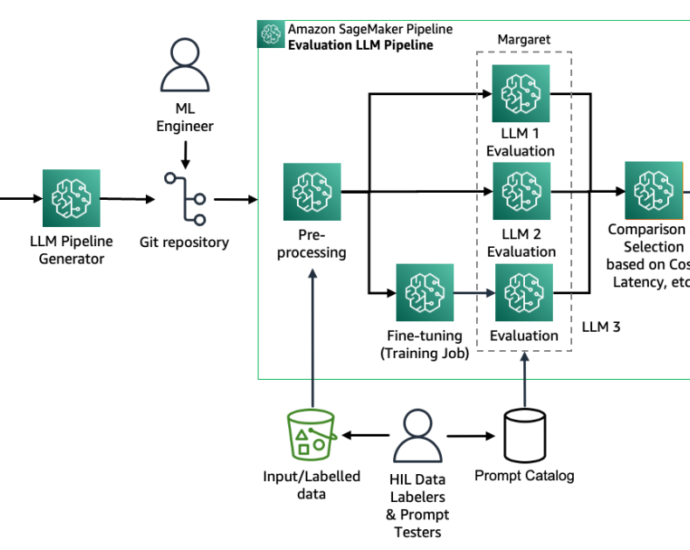

Amazon SageMaker now offers fully managed support for MLflow 3.0, which streamlines AI experimentation and accelerates your generative AI journey from idea to production. This release extends managed MLflow beyond experiment tracking to end-to-end observability, reducing time-to-market for generative AI development. As customers across industries accelerate their generative AI …