Hosting NVIDIA speech NIM models on Amazon SageMaker AI: Parakeet ASR | Amazon Web Services

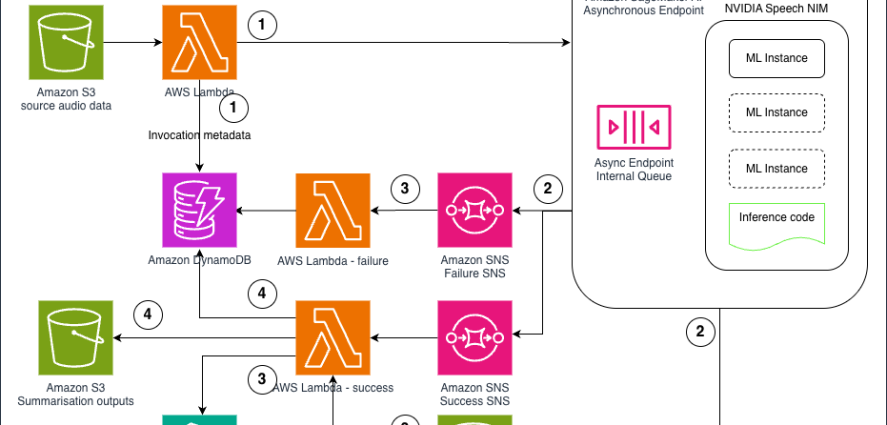

This post was written with NVIDIA, and the authors would like to thank Adi Margolin, Eliuth Triana, and Maryam Motamedi for their collaboration. Organizations today face the challenge of processing large volumes of audio data, from customer calls and meeting recordings to podcasts and voice messages, to unlock valuable insights. Automatic Speech…