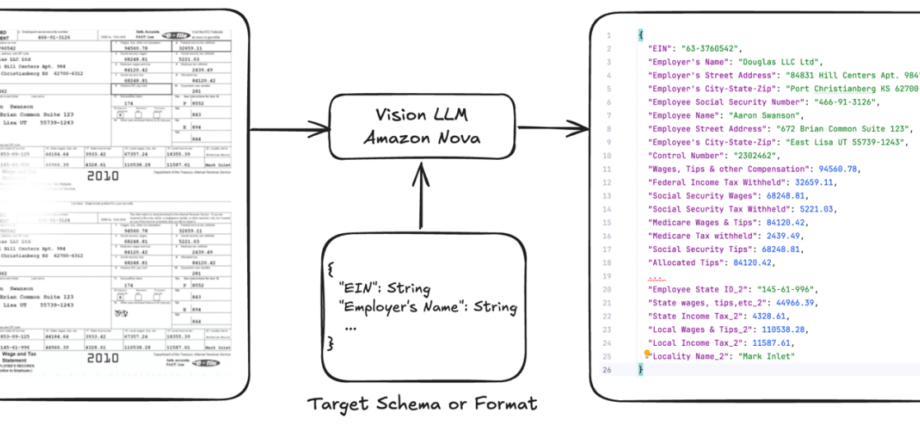

Optimizing document AI and structured outputs by fine-tuning Amazon Nova Models and on-demand inference | Amazon Web Services

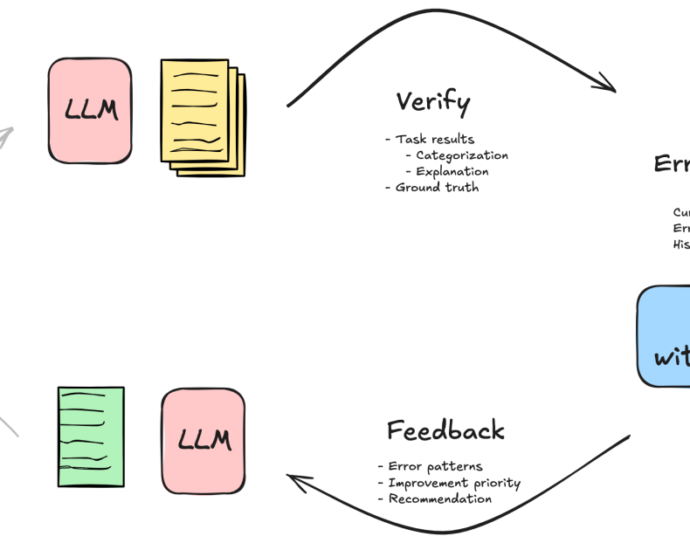

Multimodal fine-tuning represents a powerful approach for customizing vision large language models (LLMs) to excel at specific tasks that involve both visual and textual information. Although base multimodal models offer impressive general capabilities, they often fall short when faced with specialized visual tasks, domain-specific content, or output formatting requirements. Fine-tuningContinue Reading