Enhancing LLM accuracy with Coveo Passage Retrieval on Amazon Bedrock | Amazon Web Services

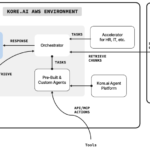

This post is co-written with Keith Beaudoin and Nicolas Bordeleau from Coveo. As generative AI transforms business operations, enterprises face a critical challenge: how can they help large language models (LLMs) provide accurate and trustworthy responses? Without reliable data foundations, these AI models can generate misleading or inaccurate responses, potentially…