NeMo Retriever Llama 3.2 text embedding and reranking NVIDIA NIM microservices now available in Amazon SageMaker JumpStart | Amazon Web Services

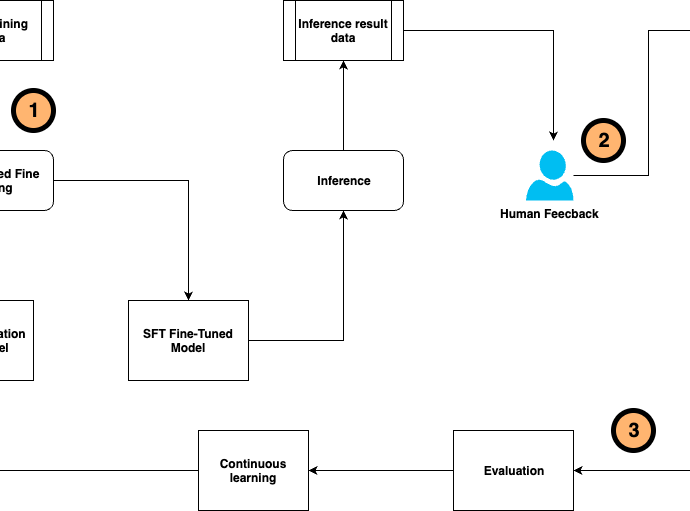

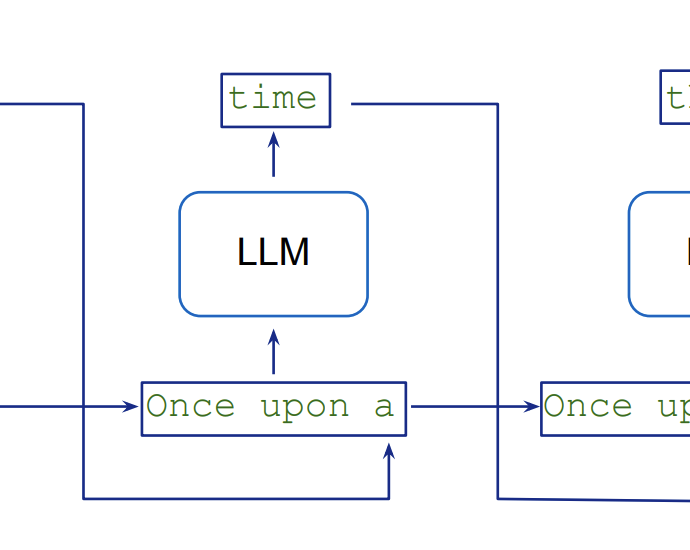

Today, we are excited to announce that the NeMo Retriever Llama3.2 Text Embedding and Reranking NVIDIA NIM microservices are available in Amazon SageMaker JumpStart. With this launch, you can now deploy NVIDIA’s optimized reranking and embedding models to build, experiment, and responsibly scale your generative AI ideas on AWS. InContinue Reading