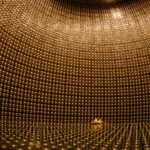

Black holes don’t just swallow light, they sing. And we just learned the tune

Black holes embody the ultimate abyss. They are the most powerful sources of gravity in the universe, capable of dramatically distorting space and time around them. When disturbed, they begin to “ring” in distinctive patterns known as quasinormal modes: ripples in space-time that travel outward as detectable gravitational waves. In events…