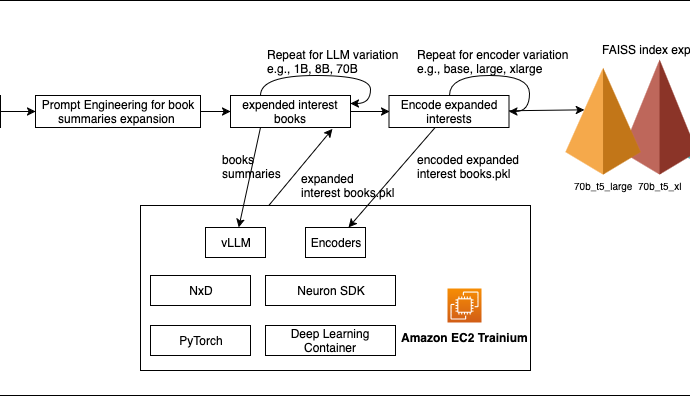

How Amazon scaled Rufus by building multi-node inference using AWS Trainium chips and vLLM | Amazon Web Services

At Amazon, our team builds Rufus, a generative AI-powered shopping assistant that serves millions of customers at immense scale. Deploying Rufus at this scale introduces significant challenges that must be carefully navigated. Rufus is powered by a custom-built large language model (LLM). As the model's complexity increased, we prioritized developing…